Python in the Cloud

Tl;dr

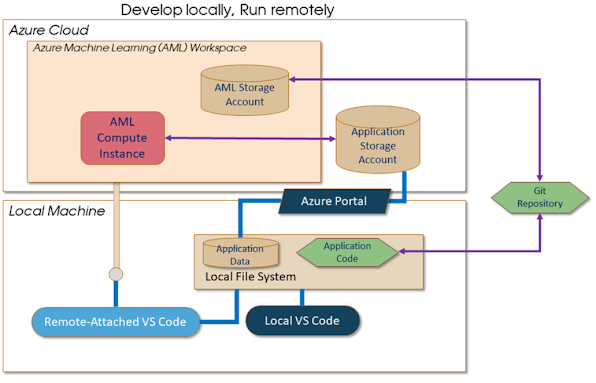

This is a "lean" tutorial on the basics of running your code in Azure. With your data residing in storage alongside a VM in the cloud, without exploring the labyrinthine complexity of Azure, and using the newly released VS-Code "Azure Machine Learning - Remote" extension, programming on the VM is as simple as developing code on your local machine, but with the scaling advantages of the Cloud. It's the first step to exploiting what cloud computing with Azure Machine Learning (AML) has to offer.

- Cloud services offer many options, Azure more than others; but with such variety it's hard to know where to begin. Let's assume you've already subscribed to Azure, and have started to learn your way around Azure's web portal, `portal.azure.com`, its universal graphical interface for setting up and managing web resources. The general management term for Cloud components, services, and products is "resources." If this isn't familiar to you, search for an online course in Azure Fundamentals that covers things like subscriptions, resource groups, regions, etc. (Harvard's DS intro, for example), so you feel comfortable using the Azure web portal. Keep in mind that most online training is targeted at enterprise users, with more detail than you'll need to know to get started. Presumably you are interested in fast results at reasonable cost. The enterprise user's overriding concerns, which also include availability, reliability and other "ilities," are not your immediate problem; you'll get there eventually. In this tutorial you will create some Azure ML resources interactively in the Azure Portal, then do development using a combination of VS-Code and command line tools. I assume you're facile with programming and working at the command line. I'll target specifically our task of moving your Python application to run in the Cloud, infused with my strong personal biases to avoid common pitfalls, based on my experience.

- Why would you want to move your code to a machine in the Cloud? For scale. Azure has some huge machines, vast network bandwidth, and nearly limitless memory. Surprisingly, applications with large computational loads can be faster on a single machine than on a cluster, and simpler to set up. Of course if your goal is to process petabytes of data, or train billion-node neural networks, there are suitable VM-cluster solutions. Whether by cluster or large VM, it's a great boon for any large scale problem, not only conventional machine learning applications, but also those large scale MCMC tasks, or training on GPUs.

Cloud resources

Cloud services consist essentially of three kinds of resources: compute, storage, and networking. The collection of your application's resources is designated a "resource group", a logical construct for creating, tracking and tearing down a cloud application. Your resource group needs to include a Machine Learning Workspace, a convenient collection of resources that itself is a kind of resource. Find it here in Azure's graphical interface, portal.azure.com:

Go ahead and create one. The Portal prompts are kind enough to offer to create a new resource group and new component resources for its Storage account and others. Take the defaults and go ahead and create new component resources. Just remember the Workspace name, and use the same resource group name for additional resources you create for your application. If you make a mistake it's easy to delete the entire resource group and start again.

Compute

In simple terms, compute consists of virtual machines (VMs), from tiny VM images to VMs with enormous RAM and GPUs, bigger than anything you'd ever have at your desk. The Workspace doesn't come with any default compute resources, since it gives you a choice of creating individual VMs, VM compute clusters, or using your existing compute resources. For this tutorial you want to create a "Compute instance" (a single VM) by choosing a size that meets your need. These Ubuntu VMs created in Azure Machine Learning Studio (AML) are managed VMs, with almost all the software needed already installed and with updates managed for you. This takes the work of setting up and configuring the VM off your shoulders. Alternatively Azure still offers legacy "Data Science VMs" that come with extensive pre-installed ML tools, but except for a few special purposes (such as running Windows OS) AML Compute Instances can take their place.

Storage

Storage is also available at scale. But unlike with your desktop, primary storage is not a file system running on a disk local to the VM, but a separate component with an access API. One reason is that storage is permanent, but VMs come and go; start them when you need work done, then tear them down to save money. Storage consists of "blobs" with strong guarantees of persistence and redundancy. A key point in this tutorial is demonstrating how to integrate existing Azure Storage, which may come already loaded with your data.

Networking

Networking services connect compute and storage. Moreover networking in the Cloud consists of software-defined virtual networks that connect your local "on-premise" equipment and your cloud resources. With cloud resources, networking glues components together with combinations of local subnets, security services (firewalls), and public-facing IP addresses. For the simple case of a VM with connected storage, the associated network looks like your home network, with a public IP address, and NAT-ed addresses on a subnet. You won't need to know much about this, since it is set up for you when you create a VM. We'll get to that; but the place to start is storage, and getting your data onto it.

Setting up your development environment

The tools you'll need to run your code in the cloud are:

- VS-Code - Microsoft's open source IDE

- git - open source version control

And two Azure resources:

- Azure Storage

- An AML Workspace with a Compute Instance.

The title illustration shows how these are cobbled together. An AML Workspace brings together an integrated collection of resources. You'll need just two: the managed VM, and attached storage where it keeps the file system with your code. Additionally you'll set up an Azure Storage account, or use an existing one, for your data. This isn't strictly necessary, but learning to manage storage is good experience. Resource setup and management can be done entirely in the Portal. As for code, you move it to AML and version it with git. Then you run your edit-run-debug cycle with two copies of VS-Code running locally. The magic is that one copy is attached to the AML compute instance, making it appear as if it is just another local instance.

My focus is on using VS-Code for code development, along with two of its Extensions, the Azure ML Extension and the Azure ML - Remote Extension. Install Extensions from the VS-Code "Activity Bar", found in a column on the far left, that looks like this:

As an open-source project, the momentum to improve and extend VS-Code is worth noting, as told by its dedicated blog. Hard-core Python developers may prefer other dedicated Python tools such as PyCharm or Spyder, but in this tutorial I'm using Microsoft's VS-Code for its elegant project management, symbolic debugging, and Extensions, which integrate well with Azure. For these reasons I prefer it to Microsoft's legacy Visual Studio IDE Tools for Python.

So install VS-Code if you do not have it already.

Besides your Azure subscription, git, and VS-Code you will need the az command line package, known as the Azure command-line interface (Azure CLI), and its extension for Azure Machine Learning, which work equivalently in either Windows or Unix shells. There are installers for it on the website.

The az package manages every Azure resource, and its az reference page is a complete catalog of Azure. It's implemented as a wrapper for Azure's RESTful management API. You rarely need to refer to it directly since VS-Code Extensions will call into az for you, and offer to install it if it's missing. And the Portal exposes the same functionality. But both of these are syntactic sugar over command line tools, and you should be aware of both.

In the long run, the other reason to know about az is for writing scripts to automate management steps. Every time you create a resource in the Portal, it gives you the option to download a "template". A template is just a description in JSON of the resource you've created. Using az you can invoke ARM (the Azure Resource Manager) to automate creating it. ARM templates are a declarative language for resource management that reduces resource creation to a single command. Look into the `az deployment group create` command for details.
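As a rough illustration of scripting this step, the sketch below builds the `az deployment group create` command in Python and hands it to `subprocess`. The resource group name `my-aml-rg` and the file name `template.json` are hypothetical placeholders, and it assumes az is installed and already authenticated.

```python
import subprocess


def deployment_command(resource_group, template_file):
    """Build the az command line that deploys an ARM template to a resource group."""
    return [
        "az", "deployment", "group", "create",
        "--resource-group", resource_group,
        "--template-file", template_file,
    ]


def deploy(resource_group, template_file):
    """Run the deployment; raises CalledProcessError if az reports a failure."""
    subprocess.run(deployment_command(resource_group, template_file), check=True)


if __name__ == "__main__":
    # Hypothetical names: substitute your own resource group and downloaded template.
    # Only prints the command; call deploy(...) to actually create resources.
    print(" ".join(deployment_command("my-aml-rg", "template.json")))
```

Keeping the command construction separate from its execution makes it easy to inspect what will run before any resources are created.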

Commands specific to Azure Machine Learning (AML) are in an az extension that needs to be installed separately. az will prompt you to do this the first time you use `az ml`.

Don't confuse the az CLI with the Azure Python SDK, which has overlapping functionality. Below is an example using the SDK to read from Storage programmatically. Most things you can do with the az CLI are also exposed as an SDK in the Python azureml package.

Finally, to test that az is working, try to authenticate your local machine to your Cloud subscription. Follow the directions when you run `az login`.

You do this once at the start. Subsequent az commands will not need authentication.

The Application

OK, you have your code and data, and installed tools. Let's start! Assuming you've gone ahead and created a new AML Workspace, the steps are

- Set up Storage,

- Prepare your code,

- Create a compute instance, and

- Launch a remote VS-Code session.

One option when you are done with your code is to deploy it by wrapping it in a "RESTful" API call as an Azure Function Application. This exposes your application as an "endpoint", callable from the Web. Building an Azure Function could be the subject of another tutorial.

First thing - Prepare your data. It pays to keep your data in the Cloud.

Storage, such as Azure blob storage, is cheap, fast and seemingly infinite in capacity; it costs about two cents a gigabyte per month, with minor charges for moving data around. For both cost and network bandwidth reasons you are better off moving your data one time to the cloud and not running your code in the Cloud against data stored locally. In short, you'll create a storage account, then create a container (the equivalent of a hierarchical file system) of "Azure Data Lake Generation 2" (ADL2) blob storage. There are numerous alternatives to blob storage, and even more premium options to run pretty much any database software on Azure you can imagine, but for the batch computations you intend to run, the place to start is with ADL2 blob storage.

Blob storage appears as files organized in a hierarchical directory structure. As the name implies, it treats data as just a chunk of bytes, oblivious to file format. Use csv files if you want, but I suggest you use a binary format like parquet for its compression, speed and comprehension of defined data-types.

Perhaps your data is already in the Cloud. If not, the easiest way to create storage is using the Portal: "create a resource", then follow the prompts. It doesn't need to be in the same resource group as your Workspace; the choice is yours. The one important choice you need to make is to select "Azure Data Lake Generation 2" storage.

Essentially this is blob storage that supersedes the original blob storage, which was built on a flat file system. Note that "Azure Data Lake Gen 1" storage is not blob storage, for reasons not worth going into, and I will ignore it.

You can upload data interactively using this Portal page. Here's an example for a container named "data-od." It takes a few clicks from the top level storage account page to navigate to the storage container and path to get here. It includes file and directory manipulation commands much like the desktop "File Explorer."

In the lean style of this tutorial, I've included only the absolute minimum of the Byzantine collection of features that make up Azure ML. Azure ML has its own data storage, or more accurately, thinly wraps existing Azure Storage components as "DataSets," adding metadata to your tables and authenticating to Storage for you, which is nice. However, the underlying storage resource is accessible if you bother to look. This tutorial, an end run around those advanced features, is good practice even if only done once, to understand how Azure ML storage is constituted.

Prepare your code

Going against the common wisdom, I recommend you convert your notebook code to Python modules once you get past initial exploratory analysis. If you are wed to using notebooks, VMs work just fine: both Azure ML workspaces and Spark clusters let you run notebooks, but you will be limited going forward in managing, debugging, testing, and deploying your code. Notebooks are great for exploring your data, but when developing code for subsequent use one gets tired of their limitations:

- They don't play well with `git`. If there are merge conflicts between versions, it's a real headache to diff them and edit the conflicts.

- They get huge once filled with data and images. Either strip them of output before you commit them, to keep them manageable, or convert them to html as a sharable, self-documenting record.

- As your code grows, all the goodness of modular, debuggable, object-oriented code is missing. Honestly the symbolic debugger in VS-Code is worth the effort of conversion, not to mention the manageability of keeping code organized by function in separate modules.

- Python modules encourage you to build tests, a necessary practice for reliable extension, reuse, sharing, and productionizing code.

So relegate notebooks to run-once experiments, and promote blocks of code to separate files that can be tested and debugged easily.
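For the notebooks you do keep, stripping output before committing can be done with a few lines of standard-library Python. This is a minimal sketch, assuming the notebook file follows the usual nbformat 4 JSON layout (a top-level `cells` list whose code cells carry `outputs` and `execution_count`); the function names are my own.

```python
import json


def strip_outputs(notebook):
    """Return a copy of a notebook dict with the output of every code cell removed."""
    cleaned = json.loads(json.dumps(notebook))  # cheap deep copy of JSON data
    for cell in cleaned.get("cells", []):
        if cell.get("cell_type") == "code":
            cell["outputs"] = []
            cell["execution_count"] = None
    return cleaned


def strip_file(path):
    """Rewrite the notebook file at `path` in place, without its outputs."""
    with open(path, encoding="utf-8") as f:
        notebook = json.load(f)
    with open(path, "w", encoding="utf-8") as f:
        json.dump(strip_outputs(notebook), f, indent=1)
        f.write("\n")
```

Calling `strip_file` on a notebook just before `git commit` keeps diffs small and limited to the code and markdown cells.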

Jupyter generates a .py file from a notebook for you on the command line with `jupyter nbconvert --to script`, followed by the notebook's file name.

Rely on git

git deserves real homage. As your projects scale and you collaborate with others (the things that make you valuable to your organization), git is the secure home for your work. As any SW Engineer knows, when you've "broken the build" there is no greater solace and respite from the wrath of your colleagues than being able to revert to a working state with just a few commands. Having a documented history is also a gift you give to posterity. Arguably the complexity of git dwarfs the complexity of a hierarchical file system, but there is no shame in relying on Stack Overflow whenever you step out of your git comfort zone. And trust me, there is a git command for any conceivable task.

Assume your code is in a git repository of your choice. Git will be your conveyance to move code back and forth between cloud and local file systems.

Setting up cloud resources - an Azure Machine Learning (AML) Workspace

I assume you've created an AML Workspace. To launch the web version, simply use the launch button once you navigate to the Workspace.

A tour of the Workspace

The Workspace collects a universe of machine learning-related features in one place. I'll just touch on a few that this tutorial uses. However the beauty of using VS-Code is that you don't need to go here: everything you need to do in the Studio can be done from the Azure Remote Extension.

- Compute instances. Once in the Workspace pane, on its left-side menu there is a "compute" menu item, which brings you to a panel to create a new compute instance. Create one. You have a wide choice of sizes: can you say 64 cores w/128G RAM? Or with four NVIDIA Teslas? Otherwise it's fine to just accept the defaults. You can start and stop instances to save money when they are not being used.

- Code editing. Under "notebooks" (a misnomer) there is a view of the file system that is attached to the Workspace. It is actually a web-based IDE for editing and running code in a pinch. It can include notebooks, plain python files, local git repositories and so on. This file system is hidden in the workspace's associated AML storage, so it persists even when compute instances are deleted. Any new compute instance you create will share this file system.

- AML storage. The Studio does not reveal its attached AML Storage Account, so consider it a black box where the remote file system and other artifacts are stored. For now, work with external Azure storage. Why? So you see how to integrate with other Azure services through storage.

- The brave among you can use the Portal to browse the default storage that comes with your workspace. The storage account can be found by its name, which starts with a munged version of the workspace name followed by a random string.

Remember the nice thing is that all the Workspace functionality you need is exposed at your desktop by VS-Code Extensions.

The development cycle

Let's get to the fun part: how these components work together and give a better experience. Notably the local edit-run-debug cycle for VS-Code is almost indistinguishable when using cloud resources remotely. Additionally both local and remote VS-Code instances are running simultaneously on your desktop, a much smoother experience than running a remote desktop server ("rdp"), or trying to work remotely entirely in the browser. How cool is that!

Let's go through the steps.

Running locally

Authentication to the cloud is managed by the Azure ML extension to VS-Code; once you are up and running and connected to your Workspace you won't need to authenticate again.

- Start VS-Code in the local directory of your `git` repository where VS-Code's `.vscode` folder resides. (The `launch.json` file there configures run-debug sessions for each file. Learn to add configurations to it.)

- Once you're satisfied the code runs locally, use `git` to commit and push it. If you commit notebooks, it's better to "clean" them of output first, to keep them manageably sized.

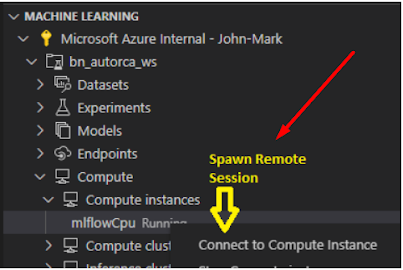

- Start the Azure ML compute instance from the Azure ML extension. First connect to your Workspace using the Azure icon (see above) in the VS-Code Activity Bar. You can spawn a remote-connected instance of VS-Code from the local VS-Code instance using the well-hidden right-click menu item "Connect to Compute Instance":

This will both start the VM, and spawn a second VS-Code instance on your desktop connected to your remote Workspace file system!

Running remotely

Note that the terminal instance that appears in the remotely-connected VS-Code terminal window is running on your VM! No need to set up a public key for "putty" or ssh to bring up a remote shell. Similarly the file system visible in VS-Code is the Workspace file system. How cool is that!

The first thing, of course, is to bring the repository in the Workspace file system up to date by using git to pull your recent changes. Then it's just edit-run-debug as usual, but with all compute and file access in the cloud.

With both VS-Code instances running on your local machine you can work back and forth between local and remote versions simultaneously, transferring code changes with git.

In the remote instance, the Azure ML Extension menus are disabled, naturally. It doesn't make sense to spawn a "remote" to a "remote".

When you're done, spin down the VM to save money. Your remote file system is preserved in Storage without you needing to do anything.

If you're sloppy about moving files with git (you forgot something, or you need to move a secrets file), note that the remote-connected instance of VS-Code can view both the cloud and local file systems, making it a "back door" to move files between them. Preferably git is used to maintain consistency between code in the file systems, so use this back door sparingly.

Alternatively the remote session can be invoked from the Portal. Try opening a remote VS-Code session on your local machine from the AML Studio notebooks pane.

If your compute instance is running, you can launch a local instance of VS-Code connected remotely to the Workspace's cloud file system (not just the file that is open in the Portal editor) and a shell running on the compute instance. But I think you'll find it's easier to spawn your remote session directly from your local VS-Code instance than to go to the Studio pane in the Azure ML page in the Portal to launch it.

Programmatic adl2 file access

One change you will need to make to run your code in the cloud is to replace local file IO.

The primary change to your code is to replace local file system IO calls with calls to the Python SDK for Blob Storage. This works both when the code runs locally and when it runs in the VM, as you switch your application to work on data in the cloud.

There are several ways to access files from the VM. Here's one way with the Azure Python SDK that works with minimal dependencies, albeit it's a lot of code to write. Wrap this in a module, then put your efforts into the computational task at hand. The Python SDK defines numerous classes in different packages. This class, DataLakeServiceClient, is specific to adl2 blob storage. Locally you will need to install the package that provides it, `azure-storage-file-datalake`, along with `pandas` and `pyarrow`.

pyarrow is needed by pandas to read parquet files. The Apache "Arrow" project is an in-memory data format that speaks many languages.

In this code snippet, the first function returns a connection to storage. The second function locates the file, retrieves the file contents as a byte string, then converts that to a file object that can be read as a Pandas DataFrame. This method works the same whether you reference the default storage that comes with the Azure ML workspace you created, or some pre-existing storage in other parts of the Azure cloud.

You can use the `__name__ == '__main__'` section to embed test code. It doesn't get run when you import the file. But as you develop other scripts that use this one, you can invoke this file with the path to a known blob to check that it hasn't broken.
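The two functions described above might be sketched as follows. This is my reconstruction under stated assumptions, not necessarily the code the description was written for: the function names are hypothetical, the data is assumed to be a parquet blob, and the `__main__` section assumes a local, untracked `secrets.py` that defines `AZURE_STORAGE_ACCOUNT`, `STORAGE_ACCOUNT_KEY`, and `CONTAINER`.

```python
import io


def account_url(account_name):
    """Endpoint of an ADL2 storage account (the 'dfs' endpoint)."""
    return f"https://{account_name}.dfs.core.windows.net"


def get_service_client(account_name, account_key):
    """Return a connection to the storage account, authenticated by account key."""
    # Imported here so the module still loads where the SDK is not installed.
    from azure.storage.filedatalake import DataLakeServiceClient
    return DataLakeServiceClient(account_url=account_url(account_name),
                                 credential=account_key)


def bytes_to_file(contents):
    """Wrap a byte string in an in-memory file object."""
    return io.BytesIO(contents)


def read_parquet_blob(service_client, container, blob_path):
    """Download one parquet blob and return it as a pandas DataFrame."""
    import pandas as pd  # needs pyarrow installed to parse parquet
    file_system = service_client.get_file_system_client(file_system=container)
    file_client = file_system.get_file_client(blob_path)
    contents = file_client.download_file().readall()  # the blob as bytes
    return pd.read_parquet(bytes_to_file(contents))


if __name__ == "__main__":
    import sys
    if len(sys.argv) > 1:
        # Assumed local file of global assignments, kept out of git.
        from secrets import AZURE_STORAGE_ACCOUNT, STORAGE_ACCOUNT_KEY, CONTAINER
        client = get_service_client(AZURE_STORAGE_ACCOUNT, STORAGE_ACCOUNT_KEY)
        print(read_parquet_blob(client, CONTAINER, sys.argv[1]).head())
```

Run the file with the path to a known blob as its only argument to check that storage access still works.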

The DataLakeServiceClient authenticates to Azure Storage using an "account key" string that you get from the Portal, in the Storage Account "Access keys" pane. An account key is an 88 character random string that works as a "password" to your storage account. Copy it from this pane:

Since it's private it doesn't get embedded in the code, but stays hidden in another file, `secrets.py`, in the local directory, which contains only global variable assignments, in this case AZURE_STORAGE_ACCOUNT, STORAGE_ACCOUNT_KEY, and CONTAINER.

Place the file name in your `.gitignore` file, since it's something you don't want to share. This is a simple way to enforce security, and there are several better, but more involved, alternatives. You can keep secrets in environment variables, or even better use Azure "key vault" to store secrets, or just leave secrets management up to Azure ML. Granted, account keys are a simple solution if you manage them carefully when individually developing code, but you will want to button down authentication when building code for enterprise systems.
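The environment-variable alternative could look like the sketch below. It assumes you export the same three names used in the secrets file as environment variables; the helper name `load_storage_settings` is hypothetical.

```python
import os


def load_storage_settings(environ=None):
    """Read the three storage settings from environment variables."""
    environ = os.environ if environ is None else environ
    names = ("AZURE_STORAGE_ACCOUNT", "STORAGE_ACCOUNT_KEY", "CONTAINER")
    missing = [name for name in names if not environ.get(name)]
    if missing:
        # Fail early, naming what is missing but never printing a key.
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return {name: environ[name] for name in names}
```

Passing a plain dictionary as `environ` makes the function easy to test without touching the real environment.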

Similarly there are more involved, "code-lite" ways to retrieve blobs. As mentioned, Azure ML has its own thin wrapper and a default storage account created for the Workspace where it's possible to store your data. But as I've argued, you will want to know how to connect to any Azure data, not just AML-managed data. To put it politely, AML's tendency to "simplify" fundamental cloud services for data science doesn't always make things easier, and can hide what's under the hood, which makes for confusion when the "easy" services don't do what you want.

Other services, embellishments, and next steps

For computational tasks that benefit from even more processing power, the cloud offers clusters for distributed computing. But before jumping to a cluster for a distributed processing solution, e.g. Dask, consider Python's multiprocessing module, which offers a simple way to take advantage of multiple core machines, and gets around Python's single threaded architecture. For an embarrassingly parallel task, use the module to run the same code on multiple datasets, so each task runs in its own process and returns a result that the parent process receives. The module import is `from multiprocessing import Process, Manager`.

The Process class spawns a new OS process for each instance and the Manager class collects the instances' results.
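A minimal sketch of that pattern follows. The worker `summarize` is a stand-in for your own computation, and the code assumes the "fork" start method is available, as it is on the Ubuntu compute instances; on Windows use the default context instead and keep the call under the `__main__` guard.

```python
import multiprocessing as mp


def summarize(index, dataset, results):
    """Worker: a stand-in computation on one dataset; stores its result by index."""
    results[index] = sum(dataset)


def run_parallel(datasets):
    """Run summarize() on each dataset in its own OS process and gather the results."""
    # Assumption: "fork" is available (Linux); it is not on Windows.
    ctx = mp.get_context("fork")
    with ctx.Manager() as manager:
        results = manager.dict()  # shared dictionary the child processes write to
        workers = [ctx.Process(target=summarize, args=(i, data, results))
                   for i, data in enumerate(datasets)]
        for worker in workers:
            worker.start()
        for worker in workers:
            worker.join()
        # Copy results out, in input order, before the manager shuts down.
        return [results[i] for i in range(len(datasets))]


if __name__ == "__main__":
    print(run_parallel([[1, 2, 3], [10, 20], [100]]))
```

Keying results by index keeps the output order deterministic no matter which process finishes first.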

As for going beyond one machine, the sky is the limit. Apache Spark clusters seamlessly integrate coding in Python, R, SQL and Scala, to work interactively with large datasets, with tools well-adapted for data science. Spark on Azure comes in several flavors, including third party Databricks, and database-centric integration of Spark and MSSQL clusters branded "Synapse."
